Web scraping is a powerful technique that involves extracting information from the web. It is not a new technique, but it has become increasingly popular over the past few years. In this article, I will walk you through the process of scraping websites using Python.

I will start with a broad overview of web scraping, then cover some of the basics, and finally walk you through the steps of scraping a website.

Web scraping, also known as screen scraping or web harvesting, is a useful skill for data analysis, predictive analytics, and machine learning.

Why We Scrape the Web

There is a huge amount of information on the Internet, and it is growing fast. People scrape the web for many reasons; here are a few of them:

- Extracting the latest news from a news publication website

- Monitoring price changes online

- Gathering real estate listings

- Monitoring weather data

- Research

- Extracting large amounts of data for data mining

It all boils down to what data you want to get or track on the web.

Web Scraping with Python

There are many tools and programming languages for scraping content on the web, but Python makes the process smooth and simple. Web scraping can be done in Python with libraries such as Requests, BeautifulSoup, Scrapy and Selenium.

BeautifulSoup is a good choice when starting web scraping in Python, so we will be using BeautifulSoup4 and the Python Requests library for scraping in this tutorial.

Not all websites allow their contents to be scraped, so you should check a website's policies (for example, its terms of use and its robots.txt file) before scraping.
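
One place where a site often publishes its crawling rules is its robots.txt file. As a minimal sketch, Python's built-in urllib.robotparser module can read such rules. The sample rules, the "my-scraper" bot name and the example.com URLs below are made up for illustration, and passing this check does not replace reading the site's terms of use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used here so the example runs offline.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS_TXT, "my-scraper", "https://example.com/news/"))         # True
print(is_allowed(SAMPLE_ROBOTS_TXT, "my-scraper", "https://example.com/private/data"))  # False
```

To check a live site instead, the same parser can be pointed at the site's robots.txt URL with set_url() and loaded with read().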

The Scraping Process

There are just three steps in scraping a webpage:

- Inspecting the webpage

- Fetching the webpage

- Extracting data from it

Case Study: Scraping News Headlines

www.technewsworld.com provides the latest news from the tech industry. We will be scraping the latest news headlines from its homepage.

1. Inspecting data source

Heads up: you should have some basic knowledge of HTML. To inspect the technewsworld webpage, first visit www.technewsworld.com in your browser, then press CTRL + U to view the webpage's source code. This is the same source code we will be scraping our data from.

With some basic knowledge of HTML, you can analyze the source code and find the HTML divisions or elements that contain data such as news headlines, news overviews, article dates, etc.

2. Fetching the webpage

To scrape information from a webpage, you must first fetch (that is, download) the page. Note that computers don't see webpages as we humans do, with their beautiful layouts, colors, fonts, and so on.

Computers see and understand webpages as code, i.e., the source code we see when we view a page's source in a browser by pressing CTRL + U, as we did when inspecting the webpage.

To fetch a webpage using Python, we will use the requests library, which you can install with:

pip install requests

To fetch the webpage using the requests library:

import requests

# fetching webpage

r = requests.get("https://www.technewsworld.com/")

print(r.status_code) # 200

print(r.content) # prints the HTML source code (as bytes)

The variable r contains the response we get after sending a request to "https://www.technewsworld.com/".

r.status_code returns a response code indicating whether the request was successful. A status_code of 200 indicates the request was successful, 4xx means a client error (an error on your side) and 5xx means a server error.

r.content returns the content of the response as raw bytes, which here is the webpage's source code, the same source code you see when you view it in a web browser. The decoded string version is available as r.text.
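
The status code ranges described above can be turned into a small check before any parsing happens. This is a sketch only: status_category and fetch_html are hypothetical helper names, and the 10-second timeout is an arbitrary choice. raise_for_status() is the requests method that raises an exception for 4xx and 5xx responses.

```python
import requests


def status_category(status_code):
    """Map an HTTP status code to the broad categories described above."""
    if 200 <= status_code < 300:
        return "success"
    if 400 <= status_code < 500:
        return "client error"
    if 500 <= status_code < 600:
        return "server error"
    return "other"


def fetch_html(url, timeout=10):
    """Fetch url and return its HTML as text, raising on 4xx/5xx responses."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # raises requests.HTTPError on 4xx or 5xx
    return response.text


print(status_category(200))  # success
print(status_category(404))  # client error
print(status_category(503))  # server error
```

Calling fetch_html("https://www.technewsworld.com/") would then either return the page source or fail loudly instead of handing an error page to the parser.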

3. Parsing the webpage's source code with Beautiful Soup

After we have fetched the webpage and have access to its source code, we have to parse it using Beautiful Soup.

Beautiful Soup is a Python library for pulling data out of HTML and XML files. We are going to use it to extract data from our HTML source code.

To install Beautiful Soup:

pip install beautifulsoup4

Before we do any extraction, we have to parse the HTML we've got:

import requests

from bs4 import BeautifulSoup # import beautifulsoup

# fetching webpage

r = requests.get("https://www.technewsworld.com/")

# parsing html code with bs4

soup = BeautifulSoup(r.content, 'html.parser')

The BeautifulSoup() constructor is given two arguments here: the page's HTML source code, which is stored in r.content, and the name of an HTML parser.

html.parser is a simple HTML parsing module built into Python, and BeautifulSoup uses it to parse r.content (the HTML source code). Other parsers, such as lxml and html5lib, can be used instead if they are installed.
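
To experiment with parsing without hitting the network, the same constructor can be given a literal HTML string or its bytes. The snippet below is a small sketch with made-up markup; html_bytes stands in for what r.content would hold.

```python
from bs4 import BeautifulSoup

# Made-up markup; html_bytes mimics the bytes a response body would contain.
html_text = "<html><body><p>Hello, scraper</p></body></html>"
html_bytes = html_text.encode("utf-8")

soup_from_text = BeautifulSoup(html_text, "html.parser")
soup_from_bytes = BeautifulSoup(html_bytes, "html.parser")

print(soup_from_text.p.string)   # Hello, scraper
print(soup_from_bytes.p.string)  # Hello, scraper
```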

3.1. Extracting Page Title and Body

After parsing with Beautiful Soup, the parsed HTML is stored in the soup variable, which forms the base of all the extraction we are going to do. Let's start by retrieving the page's title, head and body elements:

import requests

from bs4 import BeautifulSoup

# Fetching webpage

r = requests.get("https://www.technewsworld.com/")

# parsing html code with bs4

soup = BeautifulSoup(r.content, 'html.parser')

# Extracting the webpage's title element

title = soup.title

print(title) # <title>...</title>

# Extract the head

head = soup.head

print(head) # <head>...</head>

# Extract the body

body = soup.body

print(body) # <body>...</body>

soup.title returns the title element of the webpage as HTML (<title>...</title>). Similarly, soup.head and soup.body return the head and body elements of the webpage.
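
Because soup.title is an element rather than plain text, its name and inner text can be read separately. A short sketch on an invented page (SAMPLE_HTML is not the real technewsworld markup):

```python
from bs4 import BeautifulSoup

# Invented page used only for illustration.
SAMPLE_HTML = """
<html>
  <head><title>Sample Tech News</title></head>
  <body><h1>Latest stories</h1></body>
</html>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

print(soup.title)           # <title>Sample Tech News</title>
print(soup.title.name)      # title
print(soup.title.string)    # Sample Tech News
print(soup.body.h1.string)  # Latest stories
```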

3.2. Finding HTML elements

Scraping only the title, head and body elements of the webpage still gives us too much unwanted data. We only want some specific data from the HTML body, which means finding specific HTML elements such as <div>, <a>, <p>, <footer>, <img>, etc.

The goal is to scrape the news headlines from the webpage. During the inspection, you may have noticed that the news articles are kept in <div> elements. Let's see whether it is useful to find all div elements in the webpage:

import requests

from bs4 import BeautifulSoup

# fetching webpage

r = requests.get("https://www.technewsworld.com/")

print(r.status_code)

# parsing html code with bs4

soup = BeautifulSoup(r.content, 'html.parser')

# finding all div tags in the webpage

div_elements = soup.find_all('div')

print(div_elements) # prints a list of all div elements in the webpage

Finding all div elements is one step closer to the goal but we still need to be more specific and find only the div elements with the data we need.

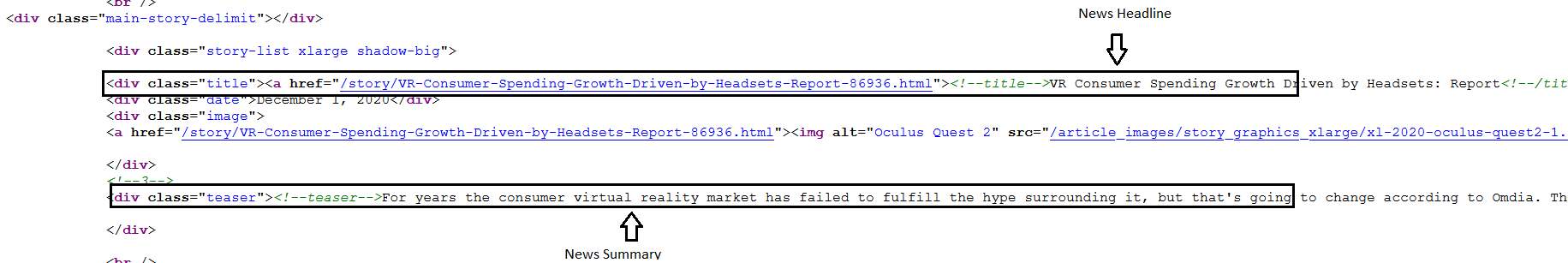

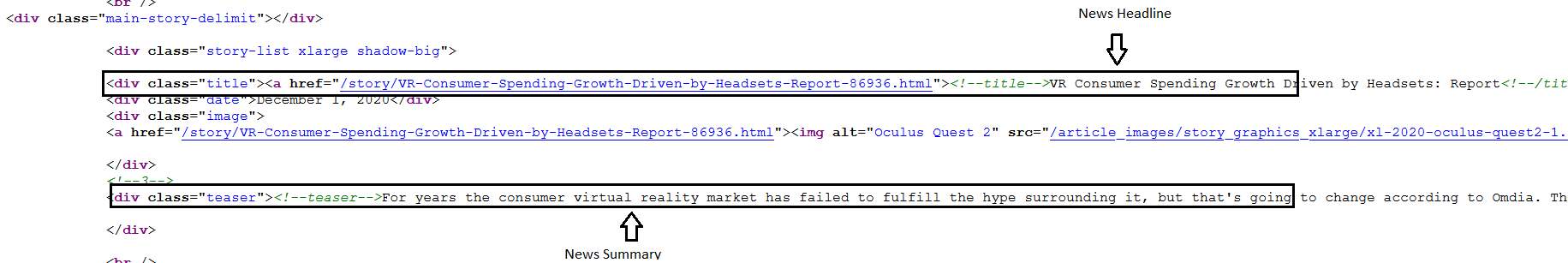

As you can see above, the news headlines are in div elements with a class name of "title", i.e., <div class="title"><a href="..">......</a></div>.

So we will need to find only div elements with class="title".

3.3. Finding HTML elements by class name

To find all div elements with a class of "title":

import requests

from bs4 import BeautifulSoup

# Fetching webpage

r = requests.get("https://www.technewsworld.com/")

print(r.status_code)

# parsing html code with bs4

soup = BeautifulSoup(r.content, 'html.parser')

# finding all div tags with class = 'title'

div_title = soup.find_all('div', class_="title")

soup.find_all('div', class_="title") looks for all div elements with class="title" (<div class="title">) and returns a list of them. The keyword is spelled class_ because class is a reserved word in Python.
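
For comparison, here is a sketch of three ways Beautiful Soup can express the same class filter, run against invented markup. Note that a div carrying several classes still matches as long as one of them is "title".

```python
from bs4 import BeautifulSoup

# Invented markup; the "summary" and "featured" classes are assumptions.
SAMPLE_HTML = """
<div class="title"><a href="/story/first.html">First headline</a></div>
<div class="summary">Not a headline</div>
<div class="title featured"><a href="/story/second.html">Second headline</a></div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

by_keyword = soup.find_all("div", class_="title")          # keyword argument
by_attrs = soup.find_all("div", attrs={"class": "title"})  # attribute dictionary
by_selector = soup.select("div.title")                     # CSS selector

print(len(by_keyword), len(by_attrs), len(by_selector))  # 2 2 2
```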

Next, we iterate over the returned list and extract the <a> element from each div, since it contains the text we are looking for.

import requests

from bs4 import BeautifulSoup

# Fetching webpage

r = requests.get("https://www.technewsworld.com/")

# parsing html code with bs4

soup = BeautifulSoup(r.content, 'html.parser')

# finding all div tags with class = 'title'

div_title = soup.find_all('div', class_="title")

# Extracting the link from the div tags

for element in div_title:

    a = element.find('a') # finds the first <a> element in each title div

    print(a)

Output:

<a href="/story/Machine-Learning-Is-Changing-the-Future-of-Software-Testing-86939.html"><!--title-->Machine Learning Is Changing the Future of Software Testing<!--/title--></a>

<a href="/story/Facebooks-Digital-Currency-Renamed-Diem-86938.html"><!--title-->Facebook's Digital Currency Renamed Diem<!--/title--></a>

<a href="/story/VR-Consumer-Spending-Growth-Driven-by-Headsets-Report-86936.html"><!--title-->VR Consumer Spending Growth Driven by Headsets: Report<!--/title--></a>

<a href="/story/Tech-Gift-Ideas-to-Help-Tackle-Your-Holiday-Shopping-List-86934.html"><!--title-->Tech Gift Ideas to Help Tackle Your Holiday Shopping List<!--/title--></a>

<a href="/story/Snaps-Spotlight-Ups-Ante-on-TikTok-With-1M-Daily-Fund-for-Top-Videos-86932.html"><!--title-->Snap's Spotlight Ups Ante on TikTok With $1M Daily Fund for Top Videos<!--/title--></a>

<a href="/story/The-Best-Hybrid-Mid-Range-SUV-Might-Surprise-You-86929.html"><!--title-->The Best Hybrid Mid-Range SUV Might Surprise You<!--/title--></a>

<a href="/story/Smart-Device-Life-Cycles-Can-Pull-the-Plug-on-Security-86928.html"><!--title-->Smart Device Life Cycles Can Pull the Plug on Security<!--/title--></a>

<a href="/story/Student-Inventor-Wins-Prize-for-Breast-Cancer-Screening-Device-86927.html"><!--title-->Student Inventor Wins Prize for Breast Cancer Screening Device<!--/title--></a>

<a href="/story/New-Internet-Protocol-Aims-to-Give-Users-Control-of-Their-Digital-Identities-86924.html"><!--title-->New Internet Protocol Aims to Give Users Control of Their Digital Identities<!--/title--></a>

<a href="/story/DevSecOps-Solving-the-Add-On-Software-Security-Dilemma-86922.html"><!--title-->DevSecOps: Solving the Add-On Software Security Dilemma<!--/title--></a>

<a href="/story/Apples-M1-ARM-Pivot-A-Step-Into-the-Reality-Distortion-Field-86919.html"><!--title-->Apple's M1 ARM Pivot: A Step Into the Reality Distortion Field<!--/title--></a>

<a href="/story/Apple-Takes-Chipset-Matters-Into-Its-Own-Hands-86916.html"><!--title-->Apple Takes Chipset Matters Into Its Own Hands<!--/title--></a>

<a href="/story/Social-Media-Upstart-Parler-Tops-App-Store-Charts-86914.html"><!--title-->Social Media Upstart Parler Tops App Store Charts<!--/title--></a>

<a href="/story/IBM-Microsoft-and-the-Future-of-Healthcare-86911.html"><!--title-->IBM, Microsoft, and the Future of Healthcare<!--/title--></a>

<a href="/story/The-Pros-and-Cons-of-Dedicated-Internet-Access-86909.html"><!--title-->The Pros and Cons of Dedicated Internet Access<!--/title--></a>

We are almost there: we have a list of <a> elements with our news headlines in them. Now we need to get the headlines out of the HTML (that is, extract the text from the <a> elements).
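
The href values printed above are relative paths such as /story/.... If the links themselves are wanted, one possible approach is to read each href attribute and join it with the site's base URL using urljoin from the standard library. The markup and the extract_links helper below are made up for illustration.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://www.technewsworld.com/"

# Made-up markup shaped like the <a> elements printed above.
SAMPLE_HTML = """
<div class="title"><a href="/story/first-headline-1.html">First headline</a></div>
<div class="title"><a href="/story/second-headline-2.html">Second headline</a></div>
"""


def extract_links(html, base_url):
    """Return absolute URLs for the <a> inside each <div class="title">."""
    soup = BeautifulSoup(html, "html.parser")
    links = []
    for div in soup.find_all("div", class_="title"):
        a = div.find("a")
        if a is not None and a.get("href"):
            links.append(urljoin(base_url, a["href"]))
    return links


for link in extract_links(SAMPLE_HTML, BASE_URL):
    print(link)
```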

3.4. Extracting text from HTML elements

To extract text with BeautifulSoup, we use the .text attribute to get the text data from an HTML element.

import requests

from bs4 import BeautifulSoup

# Fetching webpage

r = requests.get("https://www.technewsworld.com/")

# parsing html code with bs4

soup = BeautifulSoup(r.content, 'html.parser')

# finding all div tags with class = 'title'

div_title = soup.find_all('div', class_="title")

# Extracting the headline text from the div tags

for element in div_title:

    a = element.find('a') # finds the first <a> element in each title div

    print(a.text)

Output:

Machine Learning Is Changing the Future of Software Testing

Facebook's Digital Currency Renamed Diem

VR Consumer Spending Growth Driven by Headsets: Report

Tech Gift Ideas to Help Tackle Your Holiday Shopping List

Snap's Spotlight Ups Ante on TikTok With $1M Daily Fund for Top Videos

The Best Hybrid Mid-Range SUV Might Surprise You

Smart Device Life Cycles Can Pull the Plug on Security

Student Inventor Wins Prize for Breast Cancer Screening Device

New Internet Protocol Aims to Give Users Control of Their Digital Identities

DevSecOps: Solving the Add-On Software Security Dilemma

Apple's M1 ARM Pivot: A Step Into the Reality Distortion Field

Apple Takes Chipset Matters Into Its Own Hands

Social Media Upstart Parler Tops App Store Charts

IBM, Microsoft, and the Future of Healthcare

The Pros and Cons of Dedicated Internet Access

Hurray, we have scraped the latest news headlines from technewsworld.com. Note that your news headlines will be different from mine, since the homepage is always being updated with the latest news.
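
One thing to watch for: find('a') returns None when a div has no link, and calling .text on None raises an error. The sketch below wraps the extraction in a hypothetical extract_headlines function that skips such divs and uses get_text(strip=True) to trim surrounding whitespace. The markup is invented for the example.

```python
from bs4 import BeautifulSoup

# Invented markup, including one title div without a link.
SAMPLE_HTML = """
<div class="title"><a href="/story/first.html">
    First headline
</a></div>
<div class="title">No link in this one</div>
<div class="title"><a href="/story/second.html">Second headline</a></div>
"""


def extract_headlines(html):
    """Return the text of the first <a> in each <div class="title">."""
    soup = BeautifulSoup(html, "html.parser")
    headlines = []
    for div in soup.find_all("div", class_="title"):
        a = div.find("a")
        if a is None:  # find() returns None when there is no match
            continue
        headlines.append(a.get_text(strip=True))
    return headlines


print(extract_headlines(SAMPLE_HTML))  # ['First headline', 'Second headline']
```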

This small program can serve as an automated way to track the latest changes on technewsworld.com, because every time we run it, we fetch the latest headlines from the homepage.
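
To make "tracking changes" concrete, one option is to compare the headlines from the current run with those saved from earlier runs. This is only a sketch using the standard library: find_new_headlines and update_seen_file are hypothetical helpers, the JSON file is an arbitrary storage choice, and the headline strings are placeholders.

```python
import json
from pathlib import Path


def find_new_headlines(current, seen):
    """Return headlines in current that are not in seen, keeping page order."""
    seen_set = set(seen)
    return [headline for headline in current if headline not in seen_set]


def update_seen_file(current, path):
    """Report new headlines and save the combined list to a JSON file."""
    path = Path(path)
    seen = json.loads(path.read_text(encoding="utf-8")) if path.exists() else []
    new = find_new_headlines(current, seen)
    path.write_text(json.dumps(seen + new, indent=2), encoding="utf-8")
    return new


previous_run = ["Headline A", "Headline B"]
this_run = ["Headline C", "Headline A", "Headline B"]
print(find_new_headlines(this_run, previous_run))  # ['Headline C']
```

Running the scraper on a schedule and passing its list of headlines to update_seen_file would then report only the headlines that appeared since the last run.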
